Why did I train a neural net with comic books and cereal?

First things first.

Before we get started on this adventure, we should probably talk about the chronology of this site. There is none.

I hope it isn’t too jarring but I’m just going to post projects whenever the heck I remember them, and certainly not in the order they occurred. Frankly, even the act of remembering is a bit of a miracle, so just be happy that I post anything at all. However, all that said, this particular topic does happen to be the bright shiny thing I am currently chasing. So, what is it?

You guessed it. Time Machines! Wait… no… it’s neural nets.

Why neural nets?

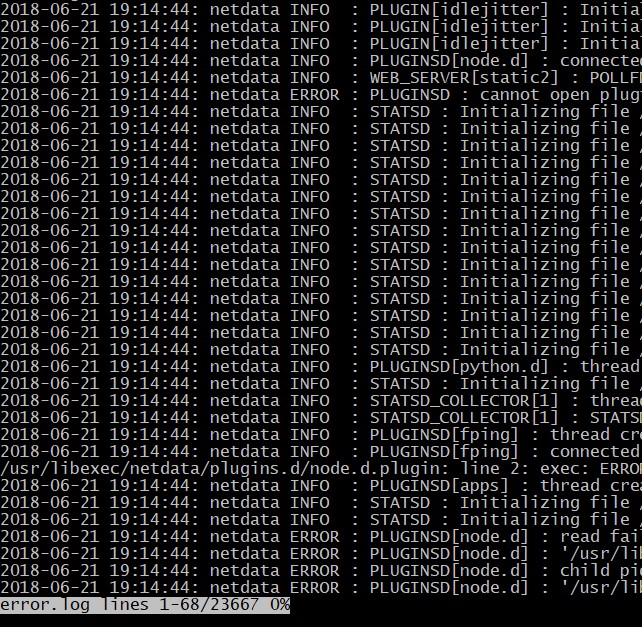

You see, since the early 1990s I’ve been doing nerd work. And a big part of nerd work is log files. Loads and loads and loads of log files. So many log files that you definitely can’t read them all. But, if you don’t read any of the logs, you will eventually get caught off-guard.

To avoid this, I’ve been using regular expressions to filter my logs and e-mail the interesting bits to myself. I can’t just e-mail all of the logs to myself because, as I mentioned, there are way too many of them. Also, I don’t have the time or inclination to read all of the other e-mail I receive each day. Seriously. Stop sending me e-mail. Better yet, let’s BAN ALL E-MAIL!

No? Well, maybe some day. A guy can dream.

In the interim, and until we do live in this perfect e-mail-free world, I need to reduce the constant e-mail noise generated by servers, routers, firewalls, etc. To do this I use regular expressions to classify log entries. I have expressions for normal stuff (which is filtered out before I see it), bad stuff (which alerts me via e-mail) and worse stuff (which will send e-mail in ALL CAPS or something). Anything else that doesn’t match any of these expressions gets looked into at a later date to help me tune the expressions.
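For illustration, a three-bucket filter like this could be sketched in Python roughly as follows. Everything specific here is an assumption made up for the example: the patterns, the sample log lines and the `classify` function are hypothetical, not the actual expressions described above.

```python
import re

# Hypothetical patterns, invented for this sketch.
# Order matters: the most severe bucket is checked first.
RULES = [
    ("worse", re.compile(r"kernel panic|out of memory", re.IGNORECASE)),
    ("bad", re.compile(r"failed password|link down", re.IGNORECASE)),
    ("normal", re.compile(r"session (opened|closed)|link up", re.IGNORECASE)),
]


def classify(line):
    """Return the first bucket whose pattern matches, else 'unknown'."""
    for bucket, pattern in RULES:
        if pattern.search(line):
            return bucket
    # Nothing matched: park it for later review and expression tuning.
    return "unknown"


if __name__ == "__main__":
    # Made-up log lines, one per bucket.
    samples = [
        "pam_unix: session opened for user bob",
        "sshd: Failed password for root from 10.0.0.5",
        "Kernel panic - not syncing",
        "something odd happened",
    ]
    for sample in samples:
        print(classify(sample), "|", sample)
```

The "unknown" bucket is the part that keeps the tuning loop going: anything that lands there is a candidate for a new expression.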

It’s not an ideal system but it’s better than nothing.

NOTE: If you are a system owner currently doing nothing, I hate you.

What can we use to fix this imperfect solution? You guessed it! Robots! Err… machine learning.

I mean, surely, if I train a neural net with buckets of good logs and bad logs, it can learn the difference and eventually be able to make decisions for me. Right?

Look, I just want a robot to do my job for me. Is that too much to ask?

No. No it’s not. I deserve a robot helper. I deserve one and no one is going to just give me one so it’s time to create one myself. But, how the hell do I do that?

How to create a neural net

After a bit of panic googling, I found Amazon SageMaker. This seemed like a good place to start. So I read some articles and did some labs and quickly decided that I was going to have to a) get a whole lot smarter really quickly or b) invest a whole lot of time that I didn’t have. This was discouraging.

But luckily the discouragement didn’t last long (this time I avoided the Swamp of Sorrows). Instead, my brain said, “Well, if it’s too much work to analyze text and make a decision, how about we just train a model to generate text. Super fun text! Something cool like… uh… comic books!”

Hey, yeah! Comic books are cool. I like comic books. Sure, let’s generate some fake comic book titles.

Well, it turns out that there are loads of ways to generate text with machine learning. I chose to use textgenrnn because I quickly found this pre-created Google Colab notebook that makes it super easy!

Now, with comic books as my new focus, I pivoted and started training two models. I fed one of the models a list of every Marvel/DC comic book title (roughly 35,000 titles). I fed the other model story names from those same 35,000 titles (which ended up being something like 300,000 total story names). The bots were fed and chock-full of comic book knowledge. Time to do damage.

NOTE: I got both title and story names from the Grand Comics Database, which is amazing and you should support it.

Once I got to the point where the models were reliably generating things that sounded like plausible comic book titles (“Batman: Ultimate Spider-Man”) and story names (“The Secret of the Sinister Secrets!”), option paralysis set in. Now what do I do?

Frankly, I’m still not sure. But hopefully I will come back to this and maybe create a Twitter bot that will generate a comic book title and story name, then do a Google image search using that same text, and finally generate a fake comic book cover image and tweet it out. We’ll see.

But I need something to do RIGHT NOW!

My brain must have sensed that I was dangerously close to the Swamp of Sorrows so it panicked and shouted “Cereal! Do something with breakfast cereal!”

NOTE: In this analogy my brain is a 5th grader who forgot he was scheduled to do a book report and is on the verge of tears.

Lucky for the troubled 5th grader (err… me, I guess), the distraction worked. It seemed like it would be hilarious to mash up comic book titles and breakfast cereal names. I mean, I already had a bot that generated comic book titles. Creating another bot that did the same thing for breakfast cereals seemed trivial. After I had both, how hard could it possibly be to just mash them together somehow?

It was hard.

Look. I’m not doing science here, I’m doing alchemy. I barely understand the Python neural net architectures I’m using. Scratch that. I don’t understand them at all. As such, I’m definitely not equipped to improvise on these architectures in code.

I am, however, equipped to fake it. So to the faking I did go! I grabbed the 600-ish cereal names and repeated them in a text file until I had roughly 35,000 total lines. Then I spliced this file with another file that contained 35,000 comic book titles.

NOTE: I used the Linux paste command for this.

This gave me a 70,000-line file that was roughly a 50/50 mix of comic book titles and cereal names. Perfect food for a neural net that would, hopefully, produce hilarious mash-ups.
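The same pad-and-splice trick can be sketched in Python, for anyone without a shell handy. This is a toy version under stated assumptions: the two short lists stand in for the real 600-ish cereal names and 35,000 titles, the helper names `repeat_to_length` and `interleave` are hypothetical, and strict line-by-line alternation is a guess, since the post only says that paste was used, not with which options.

```python
from itertools import cycle, islice


def repeat_to_length(items, n):
    """Cycle through items until exactly n entries have been produced."""
    return list(islice(cycle(items), n))


def interleave(a, b):
    """Alternate entries from a and b, stopping at the shorter list."""
    # For equal-length files this is roughly what
    # paste -d '\n' a.txt b.txt would print (the flag is an assumption).
    mixed = []
    for x, y in zip(a, b):
        mixed.extend([x, y])
    return mixed


# Toy stand-ins for the real data (invented for this sketch).
comics = ["Batman", "Spider-Man", "X-Men", "Hulk"]
cereals = ["Corn Flakes", "Raisin Bran"]

# Pad the short list to the length of the long one, then splice.
padded = repeat_to_length(cereals, len(comics))
training_lines = interleave(comics, padded)

if __name__ == "__main__":
    print("\n".join(training_lines))
```

Padding the short list first is what keeps the mix at 50/50. Without it, the cereal names would run out after the first few hundred lines and the rest of the file would be nothing but comics.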

At this point I raced back to my Google Colab notebook and told it to run through this file 100 times and learn from it. I assumed I could come back to find something hilarious like “Super Shredded Spider Bran”.

I assumed wrongly.

To be continued in Part 2.