My neural net isn’t funny… yet.

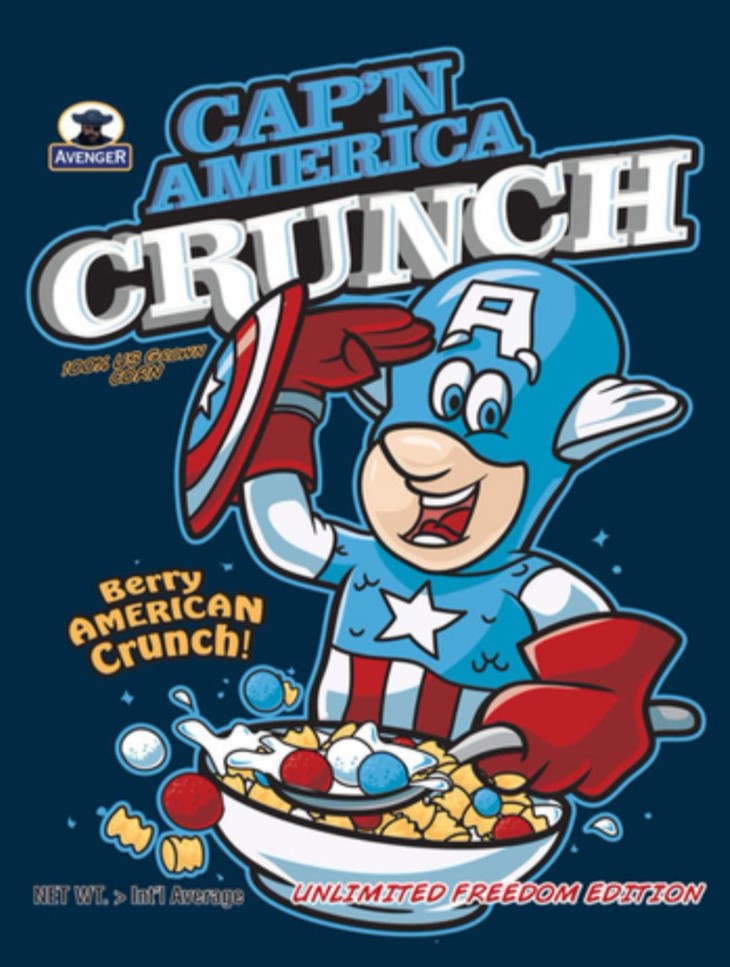

If you read part 1 of this post (and why wouldn’t you do that?) you’ll remember that I had just created a neural net using textgenrnn and Google Colab and then fed it 70,000 lines of breakfast cereal names and comic book titles. I wanted something like:

Captain America Berries – Whoops All ‘Merica!

Instead, what I got was alternating lines of comic book names and breakfast cereals. Never did the two mix. So, stuff like this:

Frosted Krispies (Kellogg’s)

The Best of Death and the Savage World Weird Worse Comics Stories

Sin City and the Call

Chocolatey Peanut Butter Crunch (Quaker Oats)

Quaker Cap'n Crunch Crunchberries

Corn Flakes (Erewhon)

Nick Fury's War

BOOOOORING!

So, after some dinking around I figured out what was going wrong. Long story short, the bot was too smart to be funny. Basically, it was considering too many nearby tokens (in my case, characters) to determine what to predict. This meant it was going to take a LONG time to make the funnies because there isn’t a lot of overlap here. I mean, words like “super” and “captain” would have got us there eventually I think. But I couldn’t wait for that. So, instead, I just made the bot stupider.

Here are the nerdy deets:

    model_cfg = {
        'word_level': False,
        'rnn_size': 128,
        'rnn_layers': 3,
        'rnn_bidirectional': True,
        'max_length': 1,
        'max_words': 100000,
    }

That max_length variable (the number of preceding tokens the bot looks at before predicting the next one) had been set to 20 or 30. So, again, acting more as alchemist than scientist, I just cranked that sonofagun down to 1.
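To make that a little more concrete, here is a toy sketch of what shrinking the context window does. This is not textgenrnn's actual internals, just plain Python that I'm assuming is a fair simplification: the `context_pairs` helper is made up for illustration, and it lists the (context, next character) pairs a character-level model would learn from for a given window size.

```python
def context_pairs(text, max_length):
    """List (context, next_char) pairs for a character-level model.

    The context is at most `max_length` characters immediately before
    the character being predicted. Hypothetical helper, for illustration only.
    """
    pairs = []
    for i in range(1, len(text)):
        start = max(0, i - max_length)
        pairs.append((text[start:i], text[i]))
    return pairs


name = "Cap'n Crunch"

# With a wide window the model sees the whole name so far.
wide = context_pairs(name, 20)
# With max_length=1 it only ever sees the single previous character.
narrow = context_pairs(name, 1)

print(wide[-1])    # ("Cap'n Crunc", 'h')
print(narrow[-1])  # ('c', 'h')
```

With the wide window, the bot knows it is deep inside a cereal name and keeps writing cereal. With a window of 1, all it knows is "the last letter was c", which could just as easily be followed by something from a comic book title.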

I then trained the model with this setting for 300+ epochs. The training configuration I was using would spit out some example text after every 10 epochs (NOTE: an epoch is basically one pass through your training data). I was starting to see some crossover between comic book and cereal names and, better yet, some of it was funny(-ish). It wasn’t all comedy gold, but there were definitely some nuggets in there.

With the model trained to this somewhat amusing level, I generated 3000 lines of output and curated it. I wanted to be very careful with the curation because I didn’t want the neural net to parrot my own ideas back at me. It’s way more fun to be surprised when the bot is hilarious. So, to keep myself somewhat impartial, I decided I needed ground rules.

Shane’s rules to nurture the AI

- Cull the boring stuff: if the neural net gives me “Raisin Bran” I don’t need to reward that behavior. Raisin Bran isn’t funny.

- Correct the typos: if the bot gives me “Batman: The Brave and the Otas” it’s ok to change Otas to Oats. It’s obvious what the funny robot meant.

- Don’t over-correct the typos: if auto-correct would never suggest the word replacement that pops in my brain, I don’t get to use it, even if it’s hilarious.

- Cull the garbage: if the text has more than 2 typos, I throw the whole line out. Even if it seems like it could be hilarious if I “creatively corrected” the typos.

- Keep the random shit: sometimes I would get results that didn’t seem to be comic books or cereals. Things like “Don’t Be Petters”. I decided to reward the absurd, so this stuff stays in.

- Keep funny typos: If there is a word that could be a funny cereal or superhero name, go ahead and keep it. For example “Almond Delight Wheat Sweeten Batties” just rolls off of the tongue and definitely feels like a superhero cereal I would enjoy. So, it stays.
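Most of these rules are judgment calls, but the two "cull" rules could be roughed out as a pre-filter before the human pass. The sketch below is only that, a sketch, and it leans on two assumptions of mine: "boring" means the line appears verbatim in the training data, and a "typo" is any word missing from a known vocabulary. The `prefilter` and `count_unknown_words` names and the tiny sample data are invented for illustration.

```python
import re


def count_unknown_words(line, vocabulary):
    """Count words not found in the vocabulary (a crude stand-in for typos)."""
    words = re.findall(r"[a-z']+", line.lower())
    return sum(1 for word in words if word not in vocabulary)


def prefilter(lines, training_lines, vocabulary, max_typos=2):
    """Drop boring and garbage lines; everything else goes to a human."""
    seen = {t.strip().lower() for t in training_lines}
    kept = []
    for line in lines:
        text = line.strip()
        if not text:
            continue
        # Cull the boring stuff: the bot just repeated its training data.
        if text.lower() in seen:
            continue
        # Cull the garbage: more than max_typos unrecognized words.
        if count_unknown_words(text, vocabulary) > max_typos:
            continue
        kept.append(text)
    return kept


training = ["Raisin Bran", "Tomb Raider"]
vocab = {"raisin", "bran", "tomb", "raider", "batman", "the", "brave", "and"}
generated = [
    "Raisin Bran",
    "Tomb Raisin Bran",
    "Batman: The Brave and the Otas",
    "Zxq Wvv Plk Bran",
]

survivors = prefilter(generated, training, vocab)
print(survivors)
```

Fixing "Otas" to "Oats", and deciding whether a typo is funny enough to keep, still needs a person. That's the fun part anyway.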

I applied these rules to 3,000 lines of output and whittled it down to roughly 300 lines. Here are some snippets:

Granola -How Fat with Raisins (Healthy Choice - Kellogg's)

Tony’s Tunch Cinnamon Cereal (Kellogg’s)

Bomb Queenity

Granola Low Fat wolf (General Mills)

All-American Spike

Avengers, Hearts Patrol

Bottle of the Face

Breade (Kellogg’s)

The Marvel Universe Comics Dick Files 2015

Cartoon Battle Shisters

Tomb Raisin Bran

Batman / Batman Occult Apples

Hawkman: Crunchy & Nick Flight

So, what’s next?

Well, obviously I fed those 300 moderately humorous lines back to the neural net and generated another 3000 lines of output. Then I whittled that output down to roughly 1800 lines and fed it back to the bot. Based on the text I was seeing during the training output, it seemed like I was in a real good place. So, I generated 1000 lines of output and double-clicked the downloaded text file.